Unless noted otherwise, code is tested with Spark 2.2

Non-committal testdrive

Minimum-effort way to test-drive Spark with a Databricks tutorial (no local setup required)

Machine learning

Quora Q/A: Why are there two ML implementations in Spark?

- spark.mllib contains the original API built on top of RDDs.

- spark.ml provides a higher-level API built on top of DataFrames for constructing ML pipelines.

Profiling

Stack Overflow led me to a blog entry whose script is said to be platform-agnostic. It did not work for me, but YMMV.

I base my notes on the manual process described here.

Installation

Listed in the order in which the components are used later on.

influxdb

sudo apt install influxdb

You can manage the service with

sudo service influxdb stop

sudo service influxdb start

statsd

Build or download the jar from https://github.com/etsy/statsd-jvm-profiler. I tested successfully with version 2.1.0.

stacktrace export utility

Download this Python script.

flamegraph

Download this Perl script.

Prepare

Set some variables:

db_user=myuser

local_ip=$(hostname -s)

port=48081

influx_uri=http://${local_ip}:$port

flaminggraph_installation=/path/to/flamegraph/

MAINCLASS=my.Mainclass

Set up influxdb: create the database and a user with a password

curl -sS -X POST $influx_uri/query --data-urlencode "q=DROP DATABASE $db_user" # >/dev/null

curl -sS -X POST $influx_uri/query --data-urlencode "q=CREATE DATABASE $db_user" # >/dev/null

curl -sS -X POST $influx_uri/query --data-urlencode "q=CREATE USER $db_user WITH PASSWORD '$db_user' WITH ALL PRIVILEGES" # >/dev/null
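To check that the setup took effect, a small read-only helper can be handy. This is a minimal sketch, assuming the InfluxDB 1.x HTTP API and the variables from the Prepare step; the function name `influx_read` and the fallback URI are made up for illustration.

```shell
# Assumed value from the Prepare step; the fallback here is only illustrative.
influx_uri=${influx_uri:-http://localhost:48081}

# Send one read-only InfluxQL statement (SHOW ..., SELECT ...) as a GET request
# to the /query endpoint and print the JSON reply.
# -f makes curl return non-zero on HTTP errors, --max-time avoids hanging.
influx_read() {
  curl -sS -f -G --max-time 5 "$influx_uri/query" --data-urlencode "q=$1"
}

# Usage (needs a running influxdb, so it is left commented out here):
# influx_read "SHOW DATABASES"
# influx_read "SHOW USERS"
```

The database and user created above should both show up in the replies.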

Add the following lines to your spark-submit call (modify as needed):

1. the db connection configuration

--conf "spark.executor.extraJavaOptions=-javaagent:statsd-jvm-profiler.jar=server=your.ip.or.hostname,port=$port,reporter=InfluxDBReporter,database=$db_user,username=$db_user,password=$db_user,prefix=sparkapp,tagMapping=spark" \

2. the profiler jar

--jars $GEOMESA_JAR,$PROFJAR \
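Put together, the two additions might look like the following sketch. It only prints the resulting command so it can be reviewed before running. `APP_JAR` and the value of `PROFJAR` are hypothetical placeholders, and `local_ip` falls back to `localhost` here. The agent path uses the jar's file name, on the assumption that jars passed via `--jars` end up in the executor's working directory (as on YARN).

```shell
# Values from the Prepare step; adjust to your environment.
db_user=myuser
local_ip=${local_ip:-localhost}
port=48081
MAINCLASS=my.Mainclass

# Hypothetical paths, for illustration only.
PROFJAR=/path/to/statsd-jvm-profiler-2.1.0.jar
APP_JAR=/path/to/my-app.jar

# The -javaagent path must match the jar's file name as seen by the executor.
agent_jar=$(basename "$PROFJAR")
agent_opts="server=$local_ip,port=$port,reporter=InfluxDBReporter,database=$db_user,username=$db_user,password=$db_user,prefix=sparkapp,tagMapping=spark"

# Print the command instead of running it.
echo spark-submit \
  --class "$MAINCLASS" \
  --conf "spark.executor.extraJavaOptions=-javaagent:$agent_jar=$agent_opts" \
  --jars "$PROFJAR" \
  "$APP_JAR"
```

Keeping the agent options in one variable makes it harder for the database, user and password values to drift apart from the ones used during the influxdb setup.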

Profile your code

- Ensure influxdb is up and running

- submit your job
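The first check can be scripted. The sketch below polls the `/ping` endpoint, assuming the InfluxDB 1.x HTTP API; the function name `wait_for_influx` and its defaults are invented for this example.

```shell
# Poll the /ping endpoint until influxdb answers or the attempts run out.
# Arguments: base URI, number of attempts (default 10).
# Note: plain POSIX sh, so the helper variables are not function-local.
wait_for_influx() {
  uri=$1
  attempts=${2:-10}
  i=1
  while [ "$i" -le "$attempts" ]; do
    if curl -sS -f -o /dev/null --max-time 2 "$uri/ping" 2>/dev/null; then
      echo "influxdb is reachable at $uri"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "influxdb did not answer at $uri after $attempts attempts" >&2
  return 1
}

# Usage: wait_for_influx "$influx_uri" && echo "ok to submit the job"
```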

When the job has finished, dump your stacktraces:

python2.7 $flaminggraph_installation/influxdb_dump.py -o $local_ip -r $port -u $db_user -p $db_user -d $db_user -t spark -e sparkapp -x stack_traces

You can filter/exclude specific classes by adding an option

-f /path/to/filterfile

Your filterfile must contain lines with classnames to filter, e.g.

sun.nio
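A filterfile with more than one entry could be created as in the sketch below. The file location and the second entry (`io.netty`) are illustrative assumptions; only `sun.nio` comes from these notes.

```shell
# Hypothetical location; any writable path works.
filterfile=${TMPDIR:-/tmp}/profiler-filter.txt

# One entry per line.
cat > "$filterfile" <<'EOF'
sun.nio
io.netty
EOF

echo "append to the dump command: -f $filterfile"
```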

Now you can create your flamegraph

perl $flaminggraph_installation/flamegraph.pl --title "$MAINCLASS" stack_traces/all_*.txt > flamegraph.svg

and open it e.g. in Firefox.

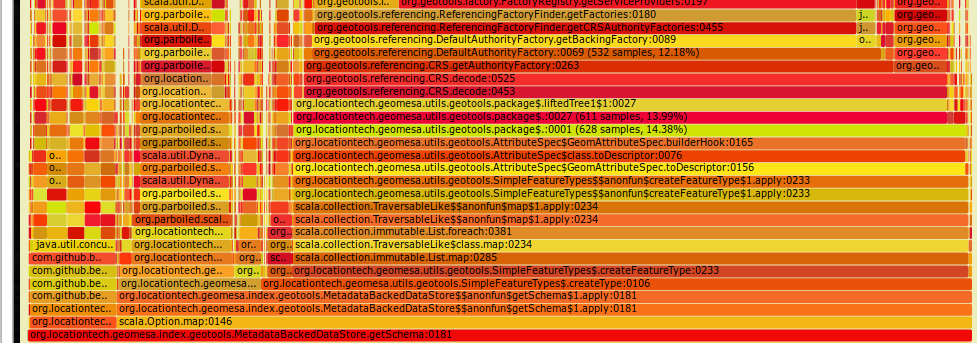

The flamegraph is interactive: click a cell to zoom in and investigate.

Read more here.

Submitting jobs

Providing spark jars

https://spark.apache.org/docs/latest/running-on-yarn.html#preparations

Download the required version [here](https://spark.apache.org/downloads.html).

How to set up the provided jars (found here):

cd /opt/spark-2.2.0-bin-hadoop2.7/jars

zip /opt/spark-2.2.0-bin-hadoop2.7/spark220-jars.zip ./*

Then copy the archive to your HDFS:

hdfs dfs -put /opt/spark-2.2.0-bin-hadoop2.7/spark220-jars.zip /user/hdfs/

Then you can make use of the provided archive by adding to spark-submit

--conf spark.yarn.archive=hdfs:///user/hdfs/spark220-jars.zip
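Since the archive path shows up in three places (zip, upload, submit), deriving all of them from a few variables helps keep them in sync. The following sketch only prints the steps for review; the variable names are chosen for this example, and the trailing `...` stands for the rest of your spark-submit arguments.

```shell
spark_home=/opt/spark-2.2.0-bin-hadoop2.7
archive=$spark_home/spark220-jars.zip
hdfs_dir=/user/hdfs

# hdfs:// plus an absolute path gives the hdfs:///... form used above.
archive_uri="hdfs://$hdfs_dir/$(basename "$archive")"

# Print the steps instead of running them.
cat <<EOF
(cd $spark_home/jars && zip $archive ./*)
hdfs dfs -put $archive $hdfs_dir/
spark-submit --conf spark.yarn.archive=$archive_uri ...
EOF
```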

Testing

TODO: look into https://github.com/holdenk/spark-testing-base